Standardized Information Sharing in Supply Chain Management

Introduction:

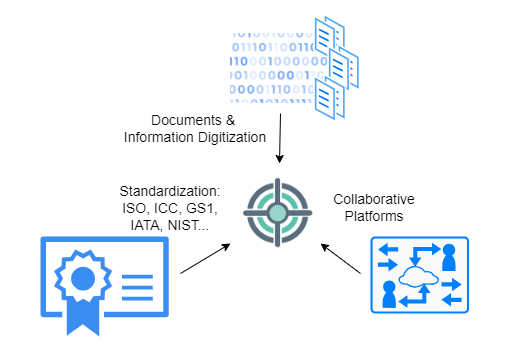

In supply chain management, effective standardized information sharing relies on three key pillars: digitization of documents and information, utilization of collaborative platforms, and standardization of document and information formats. Each pillar is essential: together they enable secure, fully digital, collaborative exchange of documents and information.

Below are detailed descriptions of each of the pillars, along with examples of what a good framework for standardized information exchange should look like:

Documents & Information Digitization: Proper digitization and availability of documentation are paramount for shipments to arrive on time. While many documents are digitized, achieving a unified view of all documents in a uniform and timely manner remains a challenge.

Documents Digitization:

- All documents should be converted to digital formats, including identification and classification of standardized data elements. Digitized documents and their associated digital elements should then be readily available to all parties on a need-to-know basis.

- The platform enabling access to the documents should be capable of extracting values from data elements and associating them with a shipment in the context of the document type.

Information Digitization:

- Any information related to logistics or business events of the shipment should be transformed into its digital format and associated with the shipment. Examples include user interaction with the shipment during logistics or business activities, tracker information about temperature, shock, humidity, etc., any missing steps of SOPs, and others.

Timely Digital Recording of Information:

- Minimize or eliminate delays between event occurrence and event recording by employing intelligent automation.

- Encourage and/or enforce users to interact with the platform via notifications to submit missing information.
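
The two points above can be made concrete with a minimal Python sketch. It measures the delay between when an event occurred and when it was recorded, and selects the events that are still unrecorded after a threshold so that a reminder notification could be sent. The `ShipmentEvent` class, its field names, and the 30-minute threshold are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class ShipmentEvent:
    """Hypothetical record of a logistics or business event on a shipment."""
    shipment_id: str
    event_type: str
    occurred_at: datetime            # when the event happened
    recorded_at: Optional[datetime]  # when it reached the platform; None if missing


def recording_lag(event: ShipmentEvent, now: datetime) -> timedelta:
    """Delay between occurrence and recording (or 'now' if still unrecorded)."""
    end = event.recorded_at if event.recorded_at is not None else now
    return end - event.occurred_at


def events_needing_reminder(
    events: List[ShipmentEvent],
    now: datetime,
    max_lag: timedelta = timedelta(minutes=30),  # assumed threshold
) -> List[ShipmentEvent]:
    """Unrecorded events whose lag exceeds the threshold."""
    return [
        e for e in events
        if e.recorded_at is None and recording_lag(e, now) > max_lag
    ]
```

A notification service could run `events_needing_reminder` periodically and prompt the responsible user for each returned event.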

Documents & Collaborative Platforms: Once information is digitized, procedures must be established regarding what information should be shared and who should have the privilege to view it. The process of sharing should be flexible, transparent, and, above all, secure. Below are items that need to be considered:

Collaborative platforms configuration:

- Easy process to onboard and suspend users

- Define and modify user roles

- Define and remove locations

Flexible, secure, and auditable access control for granting and removing access to shared information based on:

- Granularity of the information (e.g., for luxury shipments, one can monitor conditions but not see the location; for personalized therapies, one can see all locations but not the destination or the serial numbers of the trade and logistic items).

- Identity of the party accessing the shared information.

- Date, time, and geographical location of the party accessing the information.

- Type of shipment.

- Geographic location of the shipment for the entire shipping lane or just for a chosen portion of the shipping lane.
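
The access-control criteria above can be illustrated with a small Python sketch. It is an illustration under stated assumptions, not a description of any particular platform: the `AccessRule` class, the role names, the shipment types, and the field names are all hypothetical. It covers only field-level granularity by party role and shipment type; conditions on time of access or geography would need further rule attributes.

```python
from dataclasses import dataclass
from typing import Any, Dict, FrozenSet, List


@dataclass(frozen=True)
class AccessRule:
    """Hypothetical rule: which fields a role may see for a shipment type."""
    role: str
    shipment_type: str
    visible_fields: FrozenSet[str]


# Example rules mirroring the granularity examples in the text (names assumed).
RULES: List[AccessRule] = [
    # Luxury shipments: conditions are visible, location is not.
    AccessRule("carrier", "luxury",
               frozenset({"temperature", "shock", "humidity"})),
    # Personalized therapies: locations are visible, destination and serials are not.
    AccessRule("courier", "personalized_therapy",
               frozenset({"temperature", "location"})),
]


def visible_view(record: Dict[str, Any], role: str, shipment_type: str,
                 rules: List[AccessRule] = RULES) -> Dict[str, Any]:
    """Return only the fields the role may see; deny by default."""
    allowed = set()
    for rule in rules:
        if rule.role == role and rule.shipment_type == shipment_type:
            allowed |= rule.visible_fields
    return {key: value for key, value in record.items() if key in allowed}
```

Denying by default means a party with no matching rule sees nothing, which keeps granting and removing access a matter of adding or deleting rules.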

Data protection and privacy laws:

- Ability to configure data export policies that comply with data residency laws and can be enforced at the shipment level.

- Compliance with data protection laws, preventing unintended data disclosures.

Immutable and auditable store of records:

- Audit of critical shipment records including monitored key performance indicator (KPI) excursions, process deviations, and changes to shipping milestones.

- Security audit of records, including monitoring of access granting and removal as well as any changes to user access permissions.
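
One common way to make a store of records tamper-evident is a hash chain, in which each entry includes the hash of the previous one. The Python sketch below illustrates that technique; it is an assumption about how such a store could work, not something the text prescribes, and the `AuditLog` class and payload fields are hypothetical. It detects modification of past entries but does not by itself prevent it.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash preceding the first entry


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash and a canonical JSON form of the payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log whose entries are linked by hashes."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def append(self, payload: Dict[str, Any]) -> str:
        """Add a record and return its hash."""
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        entry = {"prev": prev_hash, "payload": payload,
                 "hash": _digest(prev_hash, payload)}
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash; False if any entry was altered or reordered."""
        prev_hash = GENESIS
        for entry in self._entries:
            expected = _digest(prev_hash, entry["payload"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```

Both kinds of record named above (KPI excursions and access changes) could be appended as payloads, and an auditor could call `verify()` to confirm that no past entry was altered.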

Standardization of Documents & Data Elements: Finally, the standardization of documents, along with the identification and classification of standardized data elements, is pivotal for achieving a consistent representation of vital information commonly found in documents used across supply chain management.

A standard related to the interoperability of operational data is the GS1 Business Vocabulary, which provides standardized identifiers, codes, and attributes for describing products, locations, parties, transactions, and more.

Notable efforts by the ICC through its Digital Standards Initiative project and the GS1 Business Vocabulary lay the groundwork for standardizing document data, providing a fundamental framework for enhanced interoperability and efficiency in supply chain operations.

The International Chamber of Commerce:

- The ICC (ICC-DSI publication) identified 37 different types of documents commonly used in supply chain management. The documents range from finance and payment to transport and logistics, documents of title, product-related certificates, documents for export, import, and transit, and documents related to customs.

GS1:

- The GS1 Core Business Vocabulary is a collection of vocabulary identifiers and definitions intended to support frictionless exchange of information related to supply chain management activities.

Conclusion:

As supply chain management becomes increasingly complex and trade volumes rise, secure and timely sharing of information between stakeholders becomes imperative. In this era of leveraging Artificial Intelligence, including machine learning and intelligent automation, the initial step is to digitize supply chain management and implement frameworks that enable data standardization and secure access between parties.

The three pillars above should serve as guidelines for supply chain management parties to understand the capabilities required to enable frictionless digital collaboration.

Integrating GenAI Testing into Your System Compliance and Quality Assurance Programs

Introduction:

The early adoption of GenAI technology has been shown to deliver significant value while disrupting conventional approaches to product development, testing, and approval processes. As GenAI becomes increasingly integrated into applications, the testing and validation procedures for AI-embedded systems need a thorough reassessment.

In this article, we delve into the perspectives of two esteemed members of KatalX: Head of Security and Systems Compliance, Mikhail Kostyukovski, and Head of QA and Automation, Martin Zakrzewski.

Head of Security and Systems Compliance, Mikhail Kostyukovski:

“In the realm of Supply Chain Management for Life Sciences, ensuring system compliance entails adherence to regulatory standards and industry best practices. Given that our Advanced Visibility and Collaboration software is designed for global use, it is imperative that our IT systems conform to the stringent requirements set forth by regulatory bodies such as the FDA in the US and the EMA in the EU. Furthermore, compliance extends to common regulations like GDP and GMP for product manufacturing and distribution, as well as regulations governing data privacy and protection. With the increasing integration of AI into our KatalX Platform, collaboration with the Chief Product Officer and Head of Quality Assurance is paramount to ensure that AI models align with regulations and standards and undergo rigorous testing and continuous revalidation. Alongside cybersecurity considerations, the integration of GenAI necessitates the establishment of processes to ensure the secure generation of new data for the application and the implementation of continuous validation procedures.”

Head of QA and Automation, Martin Zakrzewski:

“Upon joining KatalX, my primary responsibility was to conduct application testing and ensure the establishment of robust processes for managing test results and associated reports. However, with the incorporation of AI elements, my role has expanded to encompass collaboration with the Head of Systems Compliance, Data Scientists, and the Chief Product Officer. Presently, our QA activities are focused on three major areas, necessitating collaboration with respective representatives from each domain:

1. Testing for security vulnerabilities and maintaining auditable records.

2. Testing for the expected output of AI models and maintaining records for continuous AI model validation.

3. Testing for expected behavior according to specifications approved by the Product Owner.

As our technology stack evolves and AI models are integrated into our applications, the role of QA is evolving into a multidisciplinary function akin to traditional Architect roles. This evolution requires expertise spanning programming, security, data science, cloud technologies, and beyond.”
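
The second QA area listed above, testing for expected model output while keeping records for continuous validation, could be sketched as follows. This is a generic Python illustration, not KatalX's actual process: the `ValidationRecord` class, the `validate_model` function, and the 95% pass-rate threshold are hypothetical, and the model is treated as a plain function from prompt to text.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Tuple

# A test case pairs a prompt with a check applied to the model's output.
Case = Tuple[str, Callable[[str], bool]]


@dataclass(frozen=True)
class ValidationRecord:
    """Hypothetical auditable record of one validation run."""
    model_version: str
    run_at: datetime
    total: int
    passed: int
    accepted: bool


def validate_model(model: Callable[[str], str], cases: List[Case],
                   model_version: str,
                   min_pass_rate: float = 0.95) -> ValidationRecord:
    """Run every case against the model and record the outcome."""
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    total = len(cases)
    rate = passed / total if total else 0.0  # an empty suite is never accepted
    return ValidationRecord(model_version, datetime.now(timezone.utc),
                            total, passed, rate >= min_pass_rate)
```

Storing one `ValidationRecord` per run and per model version gives a history that can be reviewed whenever the model or its data changes.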

Conclusion:

The integration of GenAI into system compliance and quality assurance programs represents a paradigm shift in the approach to testing and validation within the life sciences supply chain management domain. Through collaborative efforts and a commitment to adhering to regulatory standards and industry best practices, organizations like KatalX can leverage the transformative potential of GenAI while ensuring the safety, efficacy, and compliance of their products and systems.

Tom Z.,